Logistic regression

Inference

Prof. Maria Tackett

Risk of coronary heart disease

This dataset is from an ongoing cardiovascular study on residents of the town of Framingham, Massachusetts. We want to examine the relationship between various health characteristics and the risk of having heart disease in the next 10 years.

high_risk: 1 = High risk, 0 = Not high risk

age: Age at exam time (in years)

education: 1 = Some High School; 2 = High School or GED; 3 = Some College or Vocational School; 4 = College

currentSmoker: 0 = nonsmoker; 1 = smoker

Modeling risk of coronary heart disease

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |

|---|---|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 | -5.995 | -4.788 |

| age | 0.073 | 0.005 | 13.385 | 0.000 | 0.063 | 0.084 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 | -0.463 | -0.024 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 | -0.501 | 0.023 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 | -0.317 | 0.266 |

log(ˆπ1−ˆπ)=−5.385+0.073 age−0.242 ed2−0.235 ed3−0.020 ed4

Hypothesis test for βj

Hypotheses: H0:βj=0 vs Ha:βj≠0

Hypothesis test for βj

Hypotheses: H0:βj=0 vs Ha:βj≠0

Test Statistic: z=ˆβj−0SEˆβj

Hypothesis test for βj

Hypotheses: H0:βj=0 vs Ha:βj≠0

Test Statistic: z=ˆβj−0SEˆβj

P-value: P(|Z|>|z|),

where Z∼N(0,1), the Standard Normal distribution

Confidence interval for βj

We can calculate the C% confidence interval for βj as the following:

ˆβj±z∗SEˆβj

Confidence interval for βj

We can calculate the C% confidence interval for βj as the following:

ˆβj±z∗SEˆβj

This is an interval for the change in the log-odds for every one unit increase in xj.

Interpretation in terms of the odds

The change in odds for every one unit increase in xj.

exp{ˆβj±z∗SEˆβj}

Interpretation in terms of the odds

The change in odds for every one unit increase in xj.

exp{ˆβj±z∗SEˆβj}

Interpretation: We are C% confident that for every one unit increase in xj, the odds multiply by a factor of exp{ˆβj−z∗SEˆβj} to exp{ˆβj+z∗SEˆβj}, holding all else constant.

Model

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |

|---|---|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 | -5.995 | -4.788 |

| age | 0.073 | 0.005 | 13.385 | 0.000 | 0.063 | 0.084 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 | -0.463 | -0.024 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 | -0.501 | 0.023 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 | -0.317 | 0.266 |

Let's look at the coefficient for age

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |

|---|---|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 | -5.995 | -4.788 |

| age | 0.073 | 0.005 | 13.385 | 0.000 | 0.063 | 0.084 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 | -0.463 | -0.024 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 | -0.501 | 0.023 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 | -0.317 | 0.266 |

Let's look at the coefficient for age

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |

|---|---|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 | -5.995 | -4.788 |

| age | 0.073 | 0.005 | 13.385 | 0.000 | 0.063 | 0.084 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 | -0.463 | -0.024 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 | -0.501 | 0.023 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 | -0.317 | 0.266 |

Hypotheses

H0:β1=0 vs Ha:β1≠0

Let's look at the coefficient for age

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |

|---|---|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 | -5.995 | -4.788 |

| age | 0.073 | 0.005 | 13.385 | 0.000 | 0.063 | 0.084 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 | -0.463 | -0.024 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 | -0.501 | 0.023 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 | -0.317 | 0.266 |

Test statistic

z=0.0733−00.00547=13.4

Let's look at the coefficient for age

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |

|---|---|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 | -5.995 | -4.788 |

| age | 0.073 | 0.005 | 13.385 | 0.000 | 0.063 | 0.084 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 | -0.463 | -0.024 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 | -0.501 | 0.023 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 | -0.317 | 0.266 |

P-value

P(|Z|>|13.4|)≈0

Let's look at the coefficient for age

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |

|---|---|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 | -5.995 | -4.788 |

| age | 0.073 | 0.005 | 13.385 | 0.000 | 0.063 | 0.084 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 | -0.463 | -0.024 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 | -0.501 | 0.023 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 | -0.317 | 0.266 |

2 * pnorm(13.4,lower.tail = FALSE)## [1] 6.046315e-41Let's look at the coefficient for age

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |

|---|---|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 | -5.995 | -4.788 |

| age | 0.073 | 0.005 | 13.385 | 0.000 | 0.063 | 0.084 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 | -0.463 | -0.024 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 | -0.501 | 0.023 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 | -0.317 | 0.266 |

Conclusion: The p-value is very small, so we reject H0. The data provide sufficient evidence that age is a statistically significant predictor of whether someone is high risk of having heart disease, after accounting for education.

Comparing models

Log likelihood

logL=n∑i=1[yilog(ˆπi)+(1−yi)log(1−ˆπi)]

Log likelihood

logL=n∑i=1[yilog(ˆπi)+(1−yi)log(1−ˆπi)]

- Measure of how well the model fits the data

Log likelihood

logL=n∑i=1[yilog(ˆπi)+(1−yi)log(1−ˆπi)]

Measure of how well the model fits the data

Higher values of logL are better

Log likelihood

logL=n∑i=1[yilog(ˆπi)+(1−yi)log(1−ˆπi)]

Measure of how well the model fits the data

Higher values of logL are better

Deviance = −2logL

- −2logL follows a χ2 distribution with n−p−1 degrees of freedom

Comparing nested models

- Suppose there are two models:

- Reduced Model includes predictors x1,…,xq

- Full Model includes predictors x1,…,xq,xq+1,…,xp

Comparing nested models

- Suppose there are two models:

- Reduced Model includes predictors x1,…,xq

- Full Model includes predictors x1,…,xq,xq+1,…,xp

- We want to test the hypotheses

H0:βq+1=⋯=βp=0Ha: at least 1 βj is not 0

Comparing nested models

- Suppose there are two models:

- Reduced Model includes predictors x1,…,xq

- Full Model includes predictors x1,…,xq,xq+1,…,xp

We want to test the hypotheses H0:βq+1=⋯=βp=0Ha: at least 1 βj is not 0

To do so, we will use the Drop-in-deviance test (also known as the Nested Likelihood Ratio test)

Drop-in-deviance test

Hypotheses: H0:βq+1=⋯=βp=0Ha: at least 1 βj is not 0

Drop-in-deviance test

Hypotheses: H0:βq+1=⋯=βp=0Ha: at least 1 βj is not 0

Test Statistic: G=(−2logLreduced)−(−2logLfull)

Drop-in-deviance test

Hypotheses: H0:βq+1=⋯=βp=0Ha: at least 1 βj is not 0

Test Statistic: G=(−2logLreduced)−(−2logLfull)

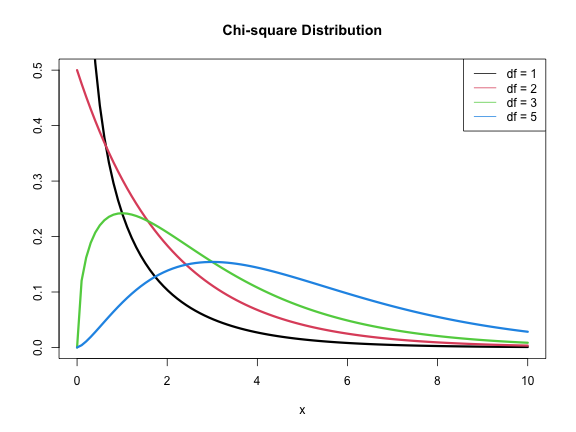

P-value: P(χ2>G),

calculated using a χ2 distribution with degrees of freedom equal to the difference in the number of parameters in the full and reduced models

χ2 distribution

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |

|---|---|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 | -5.995 | -4.788 |

| age | 0.073 | 0.005 | 13.385 | 0.000 | 0.063 | 0.084 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 | -0.463 | -0.024 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 | -0.501 | 0.023 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 | -0.317 | 0.266 |

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |

|---|---|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 | -5.995 | -4.788 |

| age | 0.073 | 0.005 | 13.385 | 0.000 | 0.063 | 0.084 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 | -0.463 | -0.024 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 | -0.501 | 0.023 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 | -0.317 | 0.266 |

Should we add currentSmoker to this model?

Should we add currentSmoker to the model?

model_reduced <- glm(high_risk ~ age + education, data = heart, family = "binomial")model_full <- glm(high_risk ~ age + education + currentSmoker, data = heart, family = "binomial")Should we add currentSmoker to the model?

# Calculate deviance for each model(dev_reduced <- glance(model_reduced)$deviance)## [1] 3300.135(dev_full <- glance(model_full)$deviance)## [1] 3279.359Should we add currentSmoker to the model?

# Calculate deviance for each model(dev_reduced <- glance(model_reduced)$deviance)## [1] 3300.135(dev_full <- glance(model_full)$deviance)## [1] 3279.359# Drop-in-deviance test statistic(test_stat <- dev_reduced - dev_full)## [1] 20.77589Should we add currentSmoker to the model?

# p-value#1 = number of new model terms in model 2pchisq(test_stat, 1, lower.tail = FALSE)## [1] 5.162887e-06Should we add currentSmoker to the model?

# p-value#1 = number of new model terms in model 2pchisq(test_stat, 1, lower.tail = FALSE)## [1] 5.162887e-06Conclusion: The p-value is very small, so we reject H0. The data provide sufficient evidence that the coefficient of currentSmoker is not equal to 0. Therefore, we should add it to the model.

Drop-in-Deviance test in R

We can use the anova function to conduct this test

- Add

test = "Chisq"to conduct the drop-in-deviance test

anova(model_reduced, model_full, test = "Chisq") %>% tidy()## # A tibble: 2 x 5## Resid..Df Resid..Dev df Deviance p.value## <dbl> <dbl> <dbl> <dbl> <dbl>## 1 4130 3300. NA NA NA ## 2 4129 3279. 1 20.8 0.00000516Model selection

Use AIC or BIC for model selection

AIC=−2∗logL−nlog(n)+2(p+1)BIC=−2∗logL−nlog(n)+log(n)×(p+1)

AIC from glance function

Let's look at the AIC for the model that includes age, education, and currentSmoker

glance(model_full)$AIC## [1] 3291.359AIC from glance function

Let's look at the AIC for the model that includes age, education, and currentSmoker

glance(model_full)$AIC## [1] 3291.359Calculating AIC

- 2 * glance(model_full)$logLik + 2 * (5 + 1)## [1] 3291.359Comparing the models using AIC

Let's compare the full and reduced models using AIC.

glance(model_reduced)$AIC## [1] 3310.135glance(model_full)$AIC## [1] 3291.359Based on AIC, which model would you choose?

Comparing the models using BIC

Let's compare the full and reduced models using BIC

glance(model_reduced)$BIC## [1] 3341.772glance(model_full)$BIC## [1] 3329.323Based on BIC, which model would you choose?